Skip The Line

i.MX 95 Verdin

Evaluation Kit

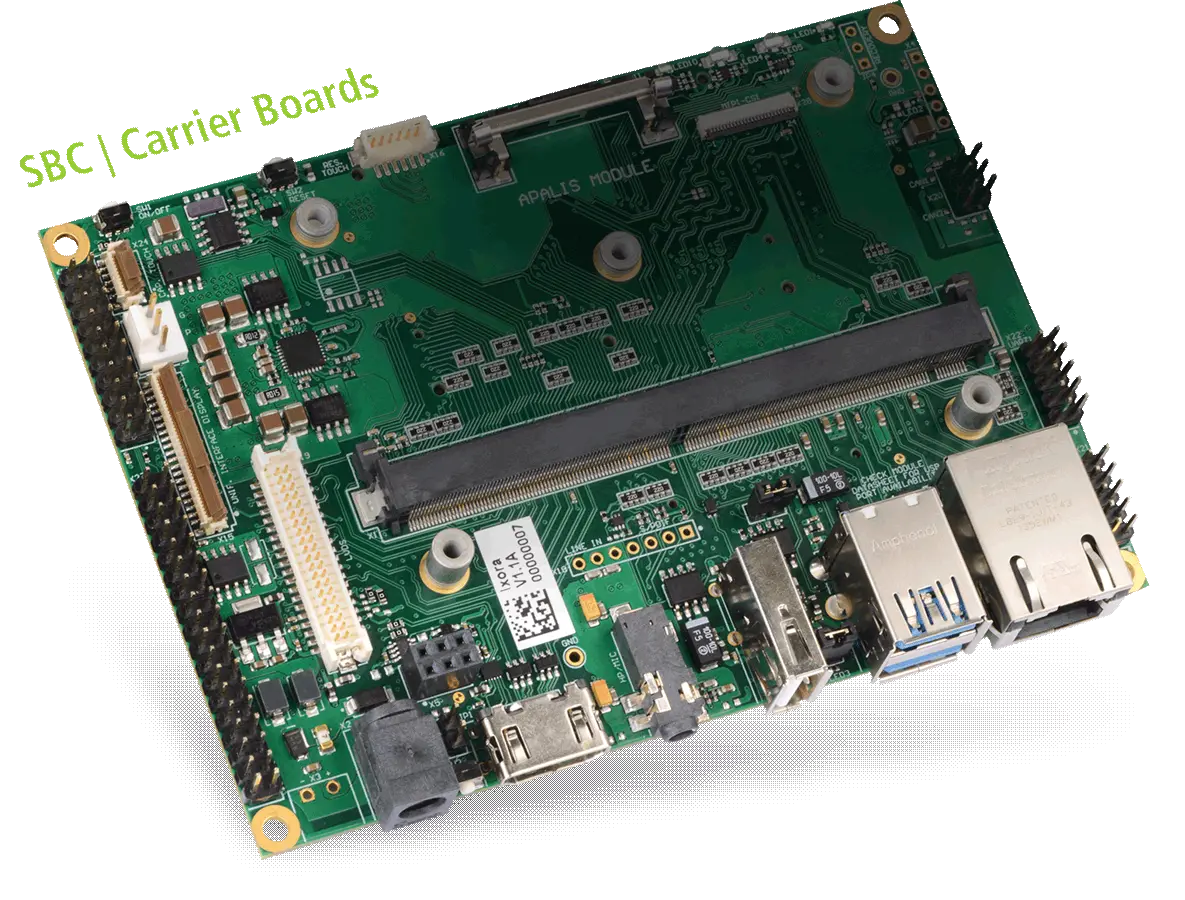

Accelerating Next-Gen Edge AI, Automotive, Industrial and Medical Applications. The Verdin EVK comes with features beyond the typical offerings of evaluation boards. It provides ample room for customization and scalability, making it a perfect fit for a wide array of applications.


Embedded Computing Made Easy

Toradex provides strong integration between hardware and software to make modern product development fast and easy. Long-term commitment, an integrated DevOps Platform and free premium support simplify product maintenance and lower the cost of ownership.


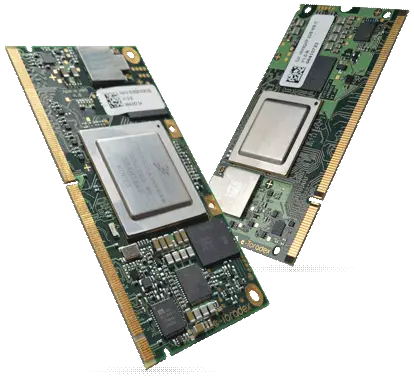

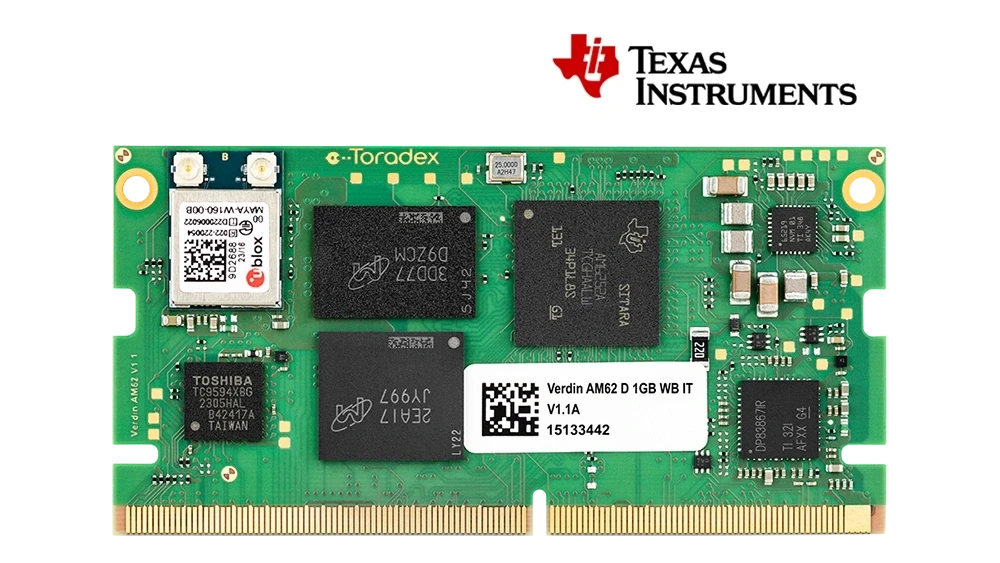

Verdin AM62

System on Module

Verdin AM62, based on the TI Sitara AM62x processor, is an attractive entry point into the Verdin ecosystem, providing a cost-optimized platform that is pin-compatible with the entire Verdin family. As with all Toradex System on Modules, Verdin AM62 comes with excellent support, extensive documentation, and a maintained Embedded Linux.

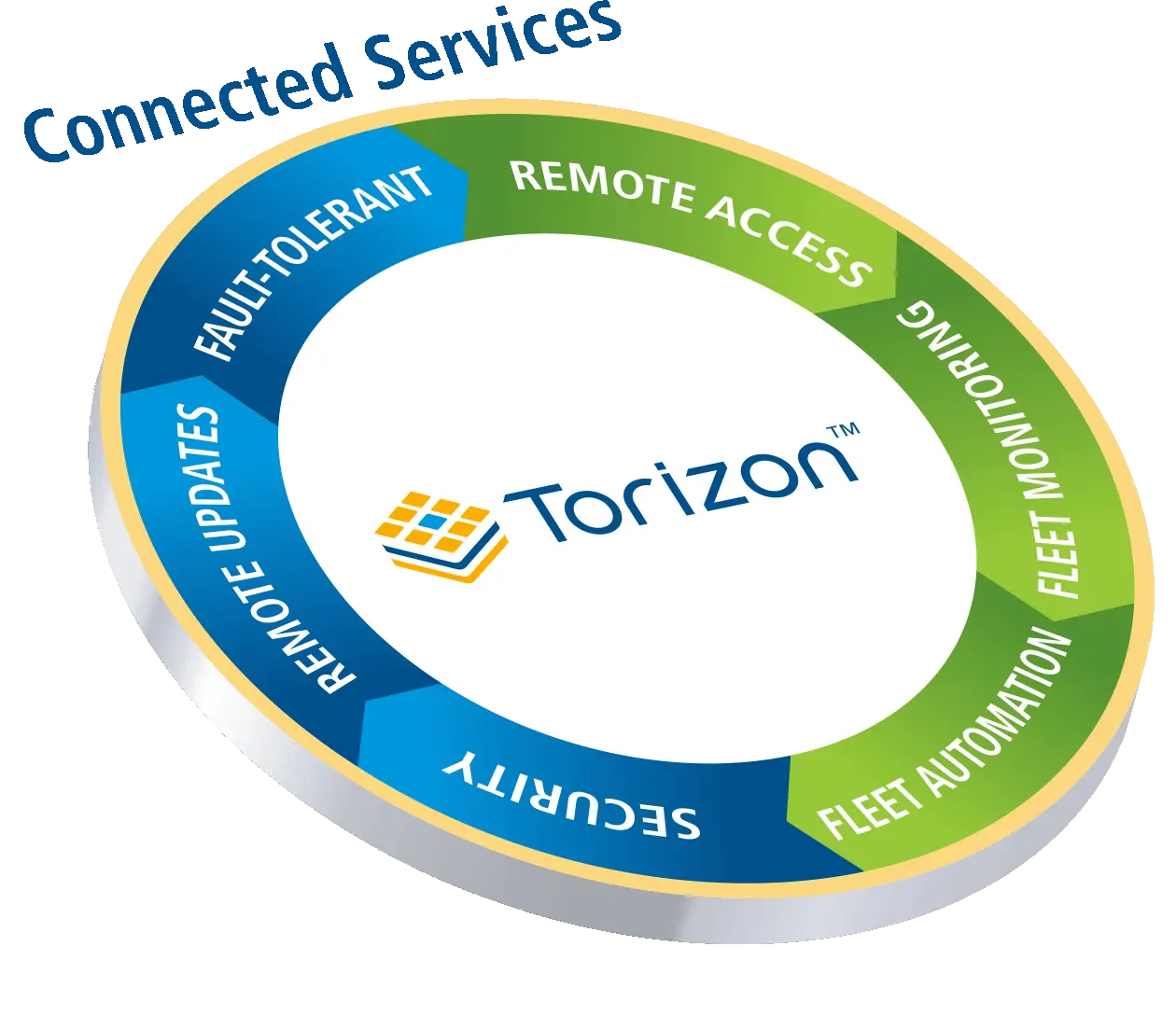

Torizon can further shorten your time to market and simplify maintenance, increasing your innovation velocity and reducing your lifetime costs.

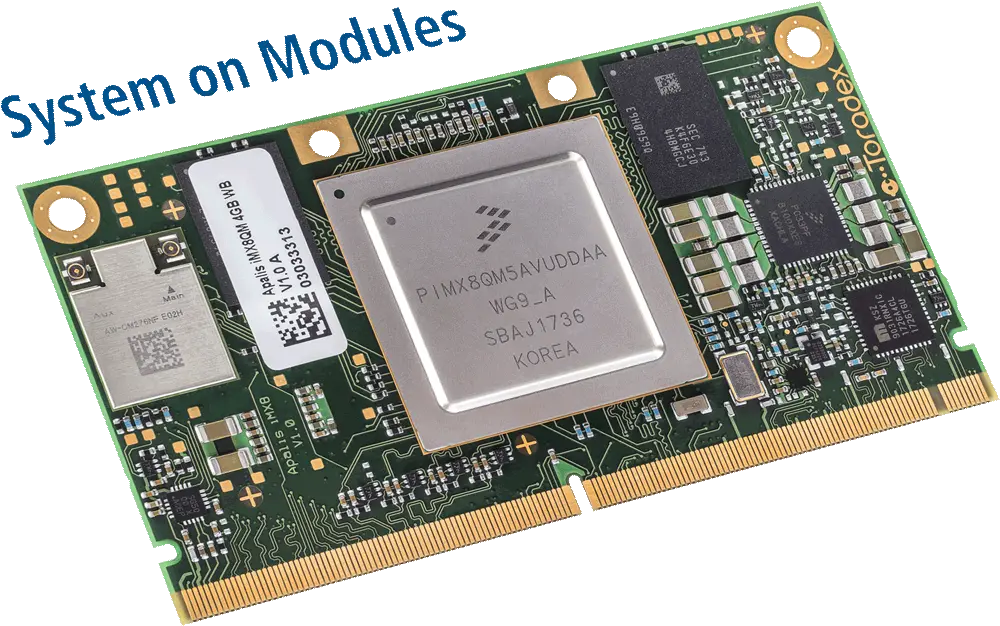

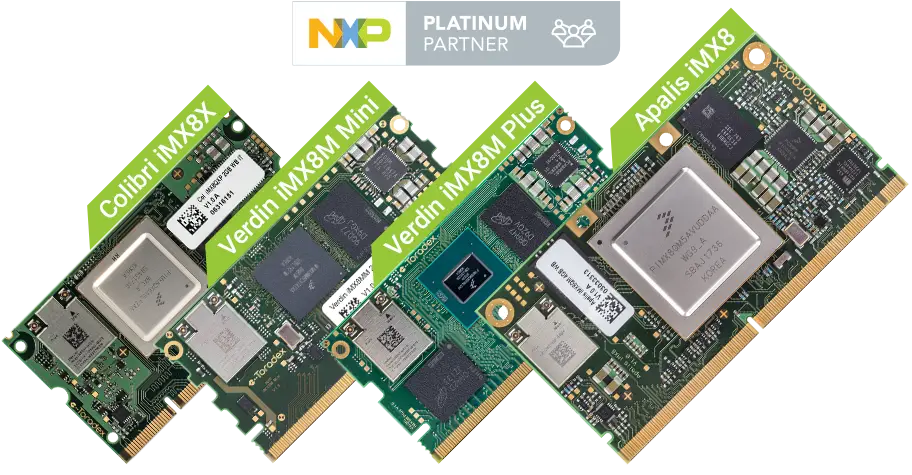

NXP® i.MX 8

System on Modules

The NXP i.MX 8 Series processors offer fast multi-OS platform deployment with advanced, full-chip hardware virtualization and domain protection.

Apalis iMX8, Verdin iMX8M Plus, Verdin iMX8M Mini, Colibri iMX8X

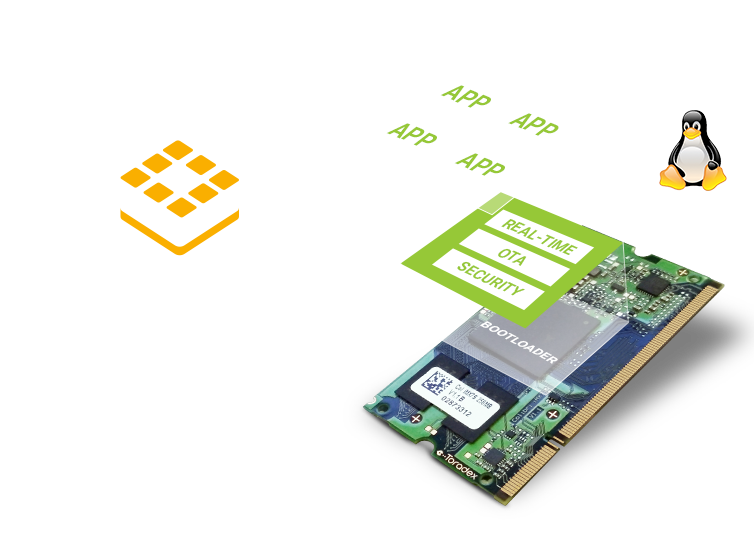

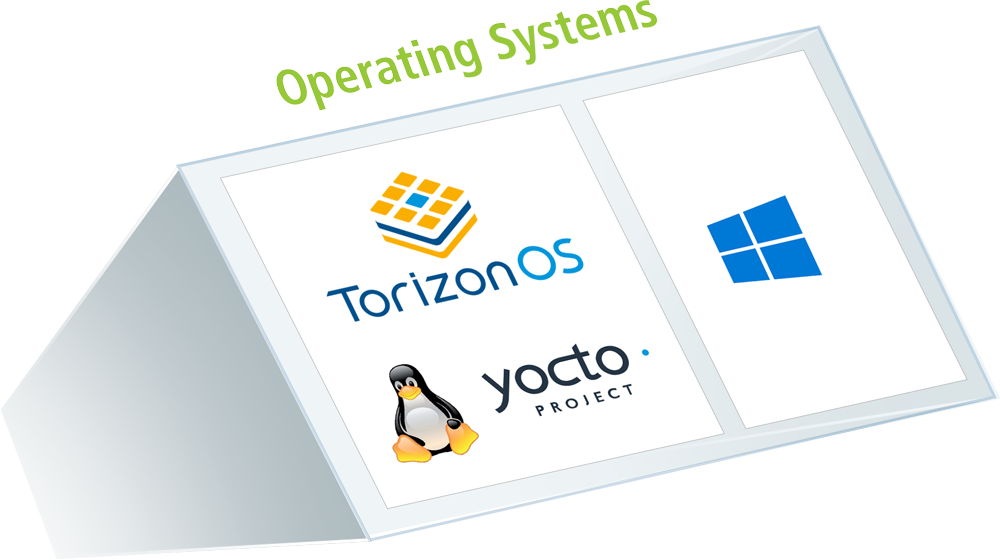

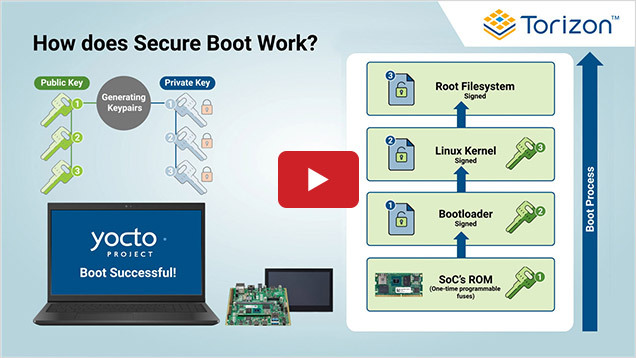

Simplifying the Development and Operation of Linux IoT Devices

Torizon makes developers more productive and helps them create easy-to-maintain products. Integrated over-the-air updates and device monitoring allow you to ship earlier, patch bugs in the field, deliver new features and detect issues faster.
